Author: Allan S. Nielsen, Ecole polytechnique fédérale de Lausanne (EPFL)

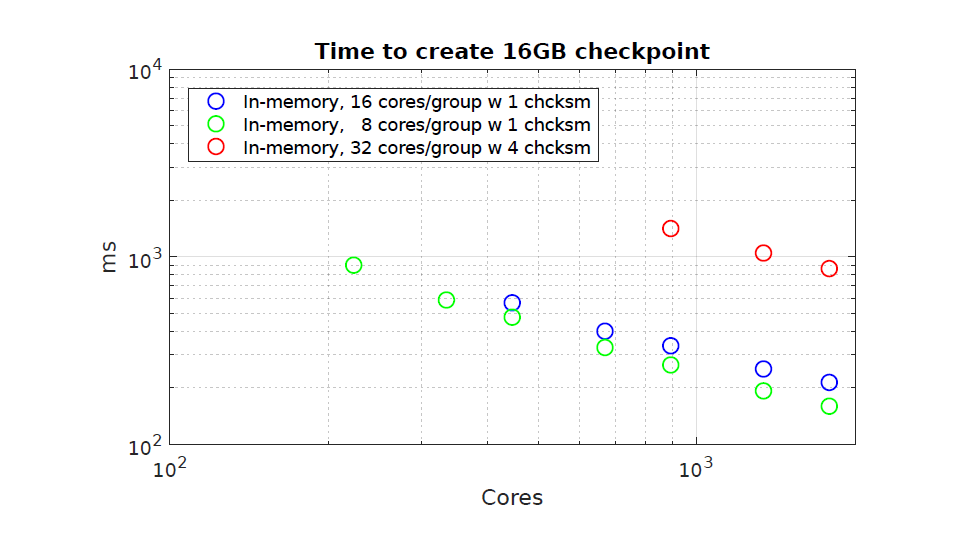

In the quest to reach Exascale computational throughput on potentially Exascale-capable machines, dealing with faulty hardware is expected to be a major challenge. On today's Petascale systems, hardware failures of various forms have already become something of a daily occurrence.
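The diskless checksum checkpointing named in this post's title rests on a simple idea: instead of writing each process's state to disk, the states are combined into a checksum held in the memory of another node, and a single lost state can be rebuilt from that checksum and the survivors. The sketch below is a minimal single-level illustration under stated assumptions (hypothetical function names, states held as NumPy arrays in one process, a plain sum as the checksum). It is not the author's multi-level scheme, and the MPI communication a real implementation needs is only mentioned in comments.

```python
import numpy as np

def encode_checksum(states):
    """Return the elementwise sum of all process states (the checksum block).

    Hypothetical helper: in a real diskless scheme this sum would be formed
    with a reduction (e.g. MPI_Reduce) and kept in the memory of a
    checksum node rather than on disk.
    """
    return np.sum(states, axis=0)

def recover_lost_state(checksum, surviving_states):
    """Rebuild the single lost state from the checksum and the survivors."""
    return checksum - np.sum(surviving_states, axis=0)

# Hypothetical example: 4 processes, each holding 5 doubles of solver state.
rng = np.random.default_rng(seed=0)
states = rng.standard_normal((4, 5))
checksum = encode_checksum(states)

failed = 2  # pretend process 2 crashed and its memory contents are gone
survivors = np.delete(states, failed, axis=0)
recovered = recover_lost_state(checksum, survivors)

# Floating-point sums are not exact, so compare with a tolerance.
print(np.allclose(recovered, states[failed]))  # True
```

A sum checksum tolerates exactly one lost state per checksum group; schemes that must survive more simultaneous failures typically add further checksums or levels, which this sketch does not attempt.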

- Written by Lena Bühler

- Category: News

Read more: Multi-level Diskless Checksum Checkpointing for HPC Resilience at ExaScale

Author: Carlos J. Falconi Delgado, Automotive Simulation Center Stuttgart e.V.

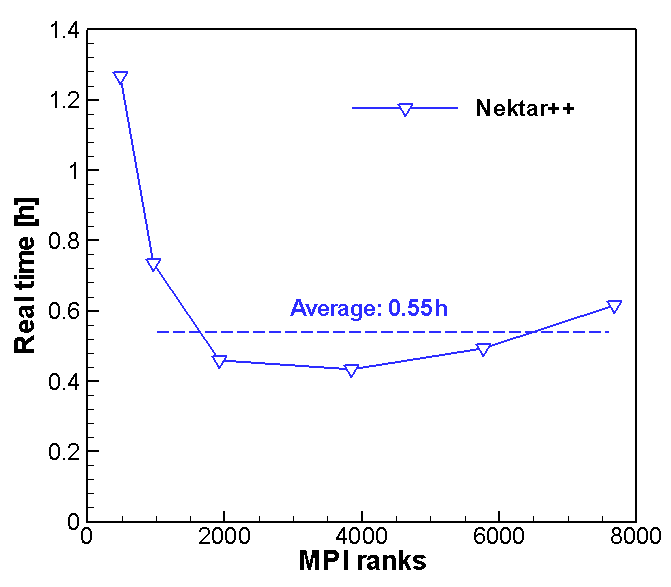

Computational methods that fully resolve the evolution of three-dimensional turbulent behavior, such as LES and DNS, incur a huge computational cost because of the temporal and spatial resolution these approaches require. A code with good scalability would enable more efficient computation and help meet the fast turnaround times (<36 hours) required by the automotive industry. To find the optimal number of processors for such massive computations, a strong scalability test, which measures the computation time as a function of the number of cores for a fixed problem size, has been carried out.
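A strong scalability test is usually summarized as speedup and parallel efficiency relative to the smallest core count that was run. The sketch below shows that arithmetic and one simple way to pick a core count against the 36-hour turnaround target. The timings are invented for illustration and are not ExaFLOW measurements; the helper names are hypothetical.

```python
def strong_scaling(timings):
    """Given {cores: wall_time}, return {cores: (speedup, efficiency)}.

    Speedup and efficiency are taken relative to the smallest core count
    in the data, since large production runs rarely include a 1-core run.
    """
    base_cores = min(timings)
    base_time = timings[base_cores]
    results = {}
    for cores in sorted(timings):
        speedup = base_time / timings[cores]
        efficiency = speedup * base_cores / cores  # 1.0 = ideal scaling
        results[cores] = (speedup, efficiency)
    return results

def cores_for_deadline(timings, deadline_hours=36.0):
    """Smallest tested core count whose runtime meets the deadline, or None."""
    feasible = [c for c in sorted(timings) if timings[c] <= deadline_hours]
    return feasible[0] if feasible else None

# Hypothetical wall-clock times in hours (NOT measured data).
timings = {256: 80.0, 512: 41.0, 1024: 22.0, 2048: 13.0}
for cores, (s, e) in strong_scaling(timings).items():
    print(f"{cores:5d} cores: speedup {s:5.2f}, efficiency {e:6.1%}")
print("fewest cores meeting 36 h:", cores_for_deadline(timings))
```

Choosing the smallest core count that meets the deadline, rather than the fastest run, keeps efficiency high; in practice one would also weigh queue times and allocation cost.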

- Written by Lena Bühler

- Category: News

Read more: Performance Analysis of an ExaFLOW code with the automotive use case

Author: Jean-Eloi W. Lombard, Imperial College London

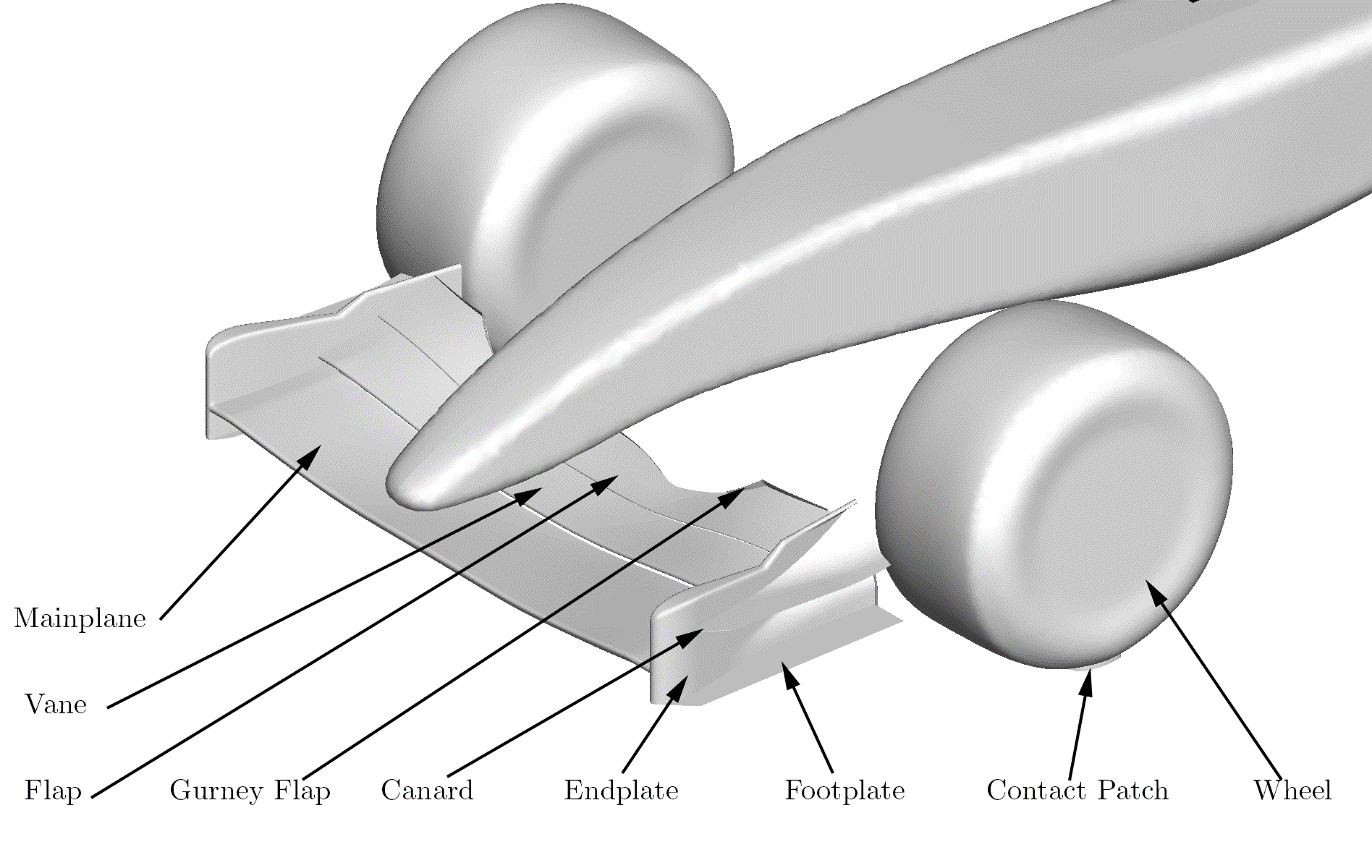

Building on our February 16th post, we at McLaren have been working towards the first comparison between PIV data and high-order simulations of the McLaren front wing computed with Nektar++ (Cantwell 2016).

- Written by Lena Bühler

- Category: News

Read more: Comparison between high-fidelity CFD and PIV data for the isolated McLaren Front-wing

Author: Adam Peplinski, KTH Royal Institute of Technology

In our previous blog post we briefly presented the implementation of an h-type Adaptive Mesh Refinement (AMR) scheme in the Spectral Element Method code Nek5000. At that point, only steady-state calculations of the nonlinear Navier-Stokes equations were supported. We have continued this work by extending our implementation to time-dependent problems, focusing mostly on a robust parallel preconditioning strategy for the pressure equation. For unsteady incompressible flows, the pressure equation is the linear sub-problem associated with the divergence-free constraint, and it can become very ill-conditioned.
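To make "preconditioning an ill-conditioned linear sub-problem" concrete, the sketch below runs the preconditioned conjugate gradient method on a small symmetric positive definite system. It deliberately swaps in the simplest possible preconditioner (Jacobi, i.e. the matrix diagonal) and a badly scaled 1D Laplacian as a stand-in for the pressure operator; both are assumptions for illustration, far simpler than the parallel preconditioners used for spectral element pressure problems, and not the authors' strategy.

```python
import numpy as np

def pcg(A, b, M_inv_diag, tol=1e-10, max_iter=1000):
    """Preconditioned conjugate gradient for SPD A with a diagonal preconditioner.

    M_inv_diag holds the inverse of the diagonal preconditioner M, so
    applying M^{-1} to a residual is an elementwise product.
    Returns (x, iterations).
    """
    x = np.zeros_like(b)
    r = b - A @ x
    z = M_inv_diag * r
    p = z.copy()
    rz = r @ z
    for k in range(1, max_iter + 1):
        Ap = A @ p
        alpha = rz / (p @ Ap)
        x += alpha * p
        r -= alpha * Ap
        if np.linalg.norm(r) <= tol * np.linalg.norm(b):
            return x, k
        z = M_inv_diag * r              # apply the preconditioner
        rz_new = r @ z
        p = z + (rz_new / rz) * p       # new search direction
        rz = rz_new
    return x, max_iter

# Hypothetical test problem: a 1D Laplacian with badly scaled unknowns.
n = 30
L = 2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)  # SPD tridiagonal
D = np.diag(np.linspace(1.0, 10.0, n))
A = D @ L @ D
b = np.ones(n)

x, its = pcg(A, b, 1.0 / np.diag(A))        # Jacobi-preconditioned CG
_, its_plain = pcg(A, b, np.ones(n))         # no preconditioning (M = I)
print("Jacobi PCG iterations:", its, "| plain CG iterations:", its_plain)
```

Passing `np.ones(n)` as the inverse diagonal reduces the routine to unpreconditioned CG, which makes it easy to compare iteration counts on the same system.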

- Written by Lena Bühler

- Category: News

Author: Björn Dick, High Performance Computing Center Stuttgart - HLRS

Besides scalability, resilience and I/O, the energy demand of HPC systems is a further obstacle on the path to exascale computing, and it is therefore also addressed by the ExaFLOW project. Even the energy demand of current systems already costs several million € per year. Furthermore, the infrastructure needed to supply such amounts of electric energy is expensive and not available at the centers today. Last but not least, almost all of the electric energy is converted into heat, posing challenges for heat dissipation.
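Determining an energy- and runtime-efficient clock frequency empirically comes down to measuring runtime and power at several frequencies and comparing derived metrics. The sketch below uses energy (average power times runtime) and the energy-delay product, one common way to weigh energy against runtime; whether the linked post uses these exact metrics is an assumption. All measurements are invented for illustration.

```python
from dataclasses import dataclass

@dataclass
class Measurement:
    freq_ghz: float      # CPU clock frequency the job was pinned to
    runtime_s: float     # measured wall-clock time in seconds
    avg_power_w: float   # measured average power draw in watts

    @property
    def energy_j(self) -> float:
        # Energy in joules = average power x runtime
        return self.avg_power_w * self.runtime_s

    @property
    def edp(self) -> float:
        # Energy-delay product: penalizes slow runs as well as high energy
        return self.energy_j * self.runtime_s

def best(measurements, key):
    """Return the measurement that minimizes the given metric."""
    return min(measurements, key=key)

# Hypothetical measurements (NOT real data).
runs = [
    Measurement(1.2, 410.0, 180.0),
    Measurement(1.8, 300.0, 215.0),
    Measurement(2.4, 250.0, 270.0),
    Measurement(3.0, 230.0, 345.0),
]
for m in runs:
    print(f"{m.freq_ghz} GHz: {m.energy_j / 1e3:.1f} kJ, EDP {m.edp:.3g} J*s")
print("min energy at", best(runs, key=lambda m: m.energy_j).freq_ghz, "GHz")
print("min EDP at", best(runs, key=lambda m: m.edp).freq_ghz, "GHz")
```

With these made-up numbers the energy-optimal and EDP-optimal frequencies differ (1.8 GHz versus 2.4 GHz), which is why the choice of metric matters when trading energy against runtime.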

- Written by Lena Bühler

- Category: News

Read more: Empirically determining energy- and runtime-efficient CPU clock frequencies
