Author: Adam Peplinski, KTH

Computational Fluid Dynamics (CFD) relies on the numerical solution of partial differential equations and is one of the most computationally intensive parts of a wide range of scientific and engineering applications. Its accuracy depends strongly on the quality of the grid and of the approximation space on which the solution is computed. Unfortunately, for most cases of interest the optimal grid cannot be found in advance, so self-adapting algorithms have to be used during the simulation. One of them is Adaptive Mesh Refinement (AMR), which dynamically modifies both the grid structure and the approximation space. This makes it possible to control the computational error during the simulation and to increase accuracy at minimal computational cost.

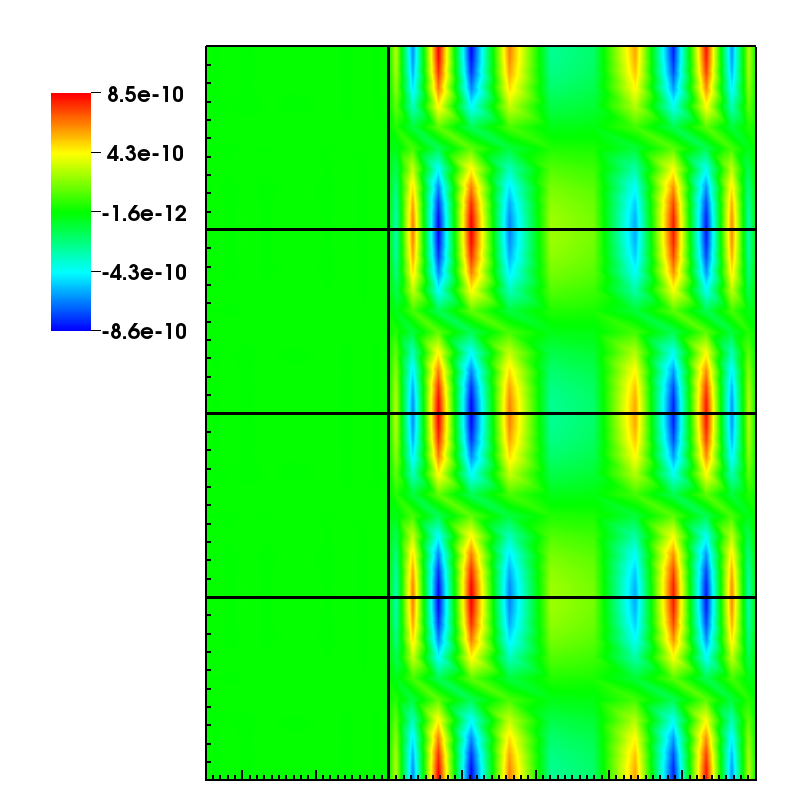

Figure 1: The error of the stream-wise velocity component for the conforming mesh. Black lines show element boundaries.
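The refine-where-the-error-is-large idea behind AMR can be illustrated with a minimal one-dimensional sketch. It is not the method used in the ExaFLOW codes: the function names (`refine`, `steep`), the midpoint error indicator, and the tolerance are all assumptions chosen for illustration. Each interval is bisected whenever a simple estimate of the local interpolation error exceeds a tolerance, so resolution accumulates only where the solution changes rapidly.

```python
import math

def refine(f, nodes, tol, max_passes=20):
    """Bisect every interval whose midpoint error indicator exceeds tol (illustrative only)."""
    for _ in range(max_passes):
        new_nodes = [nodes[0]]
        changed = False
        for a, b in zip(nodes, nodes[1:]):
            mid = 0.5 * (a + b)
            # Hypothetical error indicator: gap between f and its
            # linear interpolant, measured at the interval midpoint.
            indicator = abs(f(mid) - 0.5 * (f(a) + f(b)))
            if indicator > tol:
                new_nodes.append(mid)  # split this interval in two
                changed = True
            new_nodes.append(b)
        nodes = new_nodes
        if not changed:  # every interval meets the tolerance
            break
    return nodes

def steep(x):
    """Stand-in for a solution with a thin layer at x = 0.5."""
    return math.tanh(50.0 * (x - 0.5))

# Start from four uniform intervals on [0, 1] and adapt.
mesh = refine(steep, [i / 4 for i in range(5)], tol=1e-3)
widths = [b - a for a, b in zip(mesh, mesh[1:])]
print(len(mesh), min(widths), max(widths))
```

In this sketch the intervals far from the layer keep their original width, while those near x = 0.5 are split repeatedly. A production AMR scheme would additionally handle non-conforming interfaces, load balancing, and coarsening.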

- Written by Anna Palaiologk


Author: Christian Jacobs, University of Southampton

ExaFLOW-funded researchers at the University of Southampton have developed and released a new software framework called OpenSBLI, which can automatically generate CFD model code from high-level problem specifications. The generated code is then targeted at a variety of hardware backends (e.g. MPI, OpenMP, CUDA, OpenCL and OpenACC) through source-to-source translation using the OPS library.

A key feature of OpenSBLI is the separation of concerns that it introduces between model developers, numerical analysts, and parallel computing experts. The numerical analysts can focus on the numerical methods and their variants, while writing architecture-dependent code for HPC systems is the task of the parallel computing experts, who can support and optimise for exascale hardware architectures and their associated backends once these become available. As a result of this abstraction, the end-user model developer need not change or rewrite their high-level problem specification in order to run their simulations on new HPC hardware.
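The separation described above can be sketched in a few lines of Python. This is a toy illustration only and does not use or reproduce the OpenSBLI or OPS APIs: the functions `stencil_expr` and `generate`, the `{offset: weight}` stencil format, and the two backends are all hypothetical. The point is that one high-level specification (a finite difference stencil) is written once, and separate backend templates turn it into source code for different targets.

```python
def stencil_expr(weights, array="u", index="i"):
    """Turn {offset: weight} into a string such as '(-0.5)*u[i-1] + (0.5)*u[i+1]'."""
    terms = []
    for offset, weight in sorted(weights.items()):
        idx = index if offset == 0 else f"{index}{offset:+d}"
        terms.append(f"({weight!r})*{array}[{idx}]")
    return " + ".join(terms)

def generate(weights, backend):
    """Emit kernel source for one (hypothetical) backend from the same stencil."""
    expr = stencil_expr(weights)
    halo = max(abs(offset) for offset in weights)  # points skipped at each end
    if backend == "c":
        return (
            "void kernel(const double *u, double *du, int n, double dx) {\n"
            f"  for (int i = {halo}; i < n - {halo}; ++i)\n"
            f"    du[i] = ({expr}) / dx;\n"
            "}\n"
        )
    if backend == "python":
        return (
            "def kernel(u, dx):\n"
            f"    return [({expr}) / dx for i in range({halo}, len(u) - {halo})]\n"
        )
    raise ValueError(f"unknown backend: {backend}")

# High-level specification: second-order central difference for du/dx.
central = {-1: -0.5, 1: 0.5}

c_source = generate(central, "c")            # text only, not compiled here
python_source = generate(central, "python")

# Execute the generated Python kernel on samples of u(x) = x^2.
namespace = {}
exec(python_source, namespace)
dx = 0.1
u = [(k * dx) ** 2 for k in range(6)]
du = namespace["kernel"](u, dx)
print(c_source)
print(du)
```

Changing the stencil dictionary changes every generated kernel, and adding a backend does not touch the specification, which is the division of labour the paragraph above describes.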

More details about OpenSBLI can be found in the paper:

C. T. Jacobs, S. P. Jammy, N. D. Sandham (In Press). OpenSBLI: A framework for the automated derivation and parallel execution of finite difference solvers on a range of computer architectures. Journal of Computational Science. DOI: 10.1016/j.jocs.2016.11.001. Pre-print: https://arxiv.org/abs/1609.01277 and on the project's website: https://opensbli.github.io/

- Written by Anna Palaiologk


Author: Allan Nielsen, EPFL

This year, the 25th ACM International Symposium on High Performance Parallel and Distributed Computing was held in Kyoto from May 31st to June 4th. EPFL represented ExaFLOW at the Fault Tolerance for HPC at eXtreme Scale Workshop with a talk on Fault Tolerance in the Parareal Method. At the same workshop, Fumiyoshi Shoji, Director of the Operations and Computer Technologies Division at RIKEN AIC, delivered an exciting keynote talk on the K computer and its failures.

- Written by Anna Palaiologk


Author: Michael Bareford, EPCC

Nektar++ [1] is an open-source, MPI-based spectral element code that combines the accuracy of spectral methods with the geometric flexibility of finite elements, specifically hp-version FEM. Nektar++ was initially developed by Imperial College London and is one of the ExaFLOW co-design applications being actively developed by the consortium. It supports several scalable solvers for a range of partial differential equations, from (in)compressible Navier-Stokes to the bidomain model of cardiac electrophysiology. The test case named in the title is a simulation of the blood flow through an aorta using the unsteady diffusion equations with a continuous Galerkin projection [2]. This is a small and well-understood problem, used here as a benchmark for understanding the I/O performance of the code. The results of this work lead to improved I/O efficiency for the ExaFLOW use cases.

The aorta dataset is a mesh of a pair of intercostal arterial branches in the descending aorta, as described by Cantwell et al. [1] (see supplementary material S6 therein). The original aortic mesh contained approximately sixty thousand elements, a mix of prisms and tetrahedra. However, the tests discussed in this report use a more refined version of this dataset, one that features curved elements. The test case itself is run using the advection-diffusion-reaction solver (ADRSolver) to simulate mass transport. We executed the test case on ARCHER [3] for a range of node counts, 2^n, where n runs from 1 to 8, in order to generate the checkpoint files that could then be used by a specially written I/O benchmarker.

- Written by Anna Palaiologk


Read more: Nektar++ Aorta Test Case on ARCHER: Improving I/O Efficiency for ExaFLOW Use Cases

Author: Anna Palaiologk

On the 15th and 16th of September, the third all-partners project meeting took place in London. The progress of each work package was discussed, as well as plans for the upcoming months. Valuable feedback was also received from the Scientific Advisory Board, whose members participated in all sessions of the meeting. After the meeting, the partners visited the McLaren Technology Centre.

- Written by Anna Palaiologk
