Author: Dr. Chris Cantwell, Imperial College London

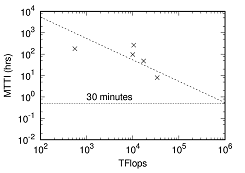

Fluid dynamics is one of the application areas driving advances in supercomputing towards the next great milestone: exascale, or 10¹⁸ floating-point operations per second (flops). One of the major challenges in running such simulations is the reliability of the hardware on which they execute. Clock speeds have saturated, so growth in available flops is now achieved primarily through increased parallelism. As a result, an exascale machine is likely to consist of far more components than previous systems. Since the reliability of individual components is not improving considerably, the time for which an exascale system can run before a failure occurs (the mean time to interrupt, MTTI) is expected to be on the order of a few minutes. Even the latest petascale (10¹⁵ flops) supercomputers have an MTTI of only around 8 hours.
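The link between component count and MTTI can be made concrete with a short calculation. The sketch below assumes that component failures are independent and exponentially distributed, so that failure rates add and the system MTTI is the per-component MTTI divided by the number of components. The component count (`n_petascale`) and the 100-fold growth factor are hypothetical values chosen only for illustration; the component count cancels out, so only the growth factor matters.

```python
def system_mtti(component_mtti: float, n_components: int) -> float:
    """MTTI of a system that is interrupted when any one of n components fails.

    Assumes independent, exponentially distributed failures: the failure rates
    add (rate_sys = n / component_mtti), so MTTI_sys = component_mtti / n.
    """
    return component_mtti / n_components


# Hypothetical figures for illustration only (not from a real machine).
n_petascale = 10_000        # assumed number of components in a petascale system
petascale_mtti_hours = 8.0  # MTTI quoted above for current petascale machines

# Per-component MTTI implied by the petascale figures.
component_mtti_hours = petascale_mtti_hours * n_petascale

# Assume an exascale machine uses 100x as many components of the same reliability.
n_exascale = 100 * n_petascale
exascale_mtti_minutes = 60 * system_mtti(component_mtti_hours, n_exascale)
print(f"Projected exascale MTTI: {exascale_mtti_minutes:.1f} minutes")
```

Under these assumptions the projected MTTI is 8 h × 60 / 100 = 4.8 minutes, consistent with the "few minutes" estimate above.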

Algorithms and software therefore need to be developed with resilience in mind and designed to tolerate failures when they occur. As part of the ExaFLOW project we have been examining how this might be achieved for computational fluid dynamics without adversely affecting the performance or scalability of the code, and testing prototype implementations in Nektar++. One particular concern at exascale is the amount of memory available per processor, which is currently on a downward trend. We have therefore been seeking solutions that provide resilience in a memory-conservative manner.

Fluid dynamics codes can be broken down into two phases: an initialisation phase, in which the discrete differential operator matrices are computed, and a time-integration phase, in which an initial condition is advanced in time. The latter dominates the computational cost of large-scale transient simulations of engineering interest. The data for such transient problems can likewise be split into a static component and a dynamic component. The static component consists primarily of the matrix systems and does not change during the course of the simulation. In contrast, the dynamic data changes as the simulation progresses; the flow variables are one example.
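The two phases and the static/dynamic split can be illustrated with a toy solver. The following is a minimal sketch, not Nektar++ code: it assumes a hypothetical 1D diffusion problem, where `build_static_data` plays the role of the initialisation phase (assembling an operator matrix once) and `step` plays the role of the time-integration phase (explicit Euler updates that read the static data and write only the dynamic data). All names and parameters here are illustrative.

```python
from dataclasses import dataclass
from typing import List

Matrix = List[List[float]]


@dataclass(frozen=True)
class StaticData:
    """Built once during initialisation; not modified during time integration."""
    operator: Matrix  # e.g. a discrete differential operator


@dataclass
class DynamicData:
    """Updated every time step, e.g. the flow variables."""
    u: List[float]
    time: float = 0.0


def build_static_data(n: int, dx: float) -> StaticData:
    """Initialisation phase: assemble a 1D second-difference (Laplacian) matrix
    with homogeneous Dirichlet boundaries (a hypothetical example operator)."""
    a = [[0.0] * n for _ in range(n)]
    for i in range(n):
        a[i][i] = -2.0 / dx**2
        if i > 0:
            a[i][i - 1] = 1.0 / dx**2
        if i < n - 1:
            a[i][i + 1] = 1.0 / dx**2
    return StaticData(operator=a)


def step(static: StaticData, state: DynamicData, dt: float, nu: float) -> None:
    """Time-integration phase: one explicit Euler step of du/dt = nu * A u.
    Reads the static operator and overwrites only the dynamic state."""
    a, u = static.operator, state.u
    au = [sum(a[i][j] * u[j] for j in range(len(u))) for i in range(len(u))]
    state.u = [u[i] + dt * nu * au[i] for i in range(len(u))]
    state.time += dt


static = build_static_data(n=5, dx=1.0)
state = DynamicData(u=[0.0, 0.0, 1.0, 0.0, 0.0])
for _ in range(10):
    step(static, state, dt=0.1, nu=1.0)
print(state.time, state.u)
```

Only `state` changes inside the loop, which is what makes it reasonable to treat the matrices separately from the flow variables when thinking about recovery.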

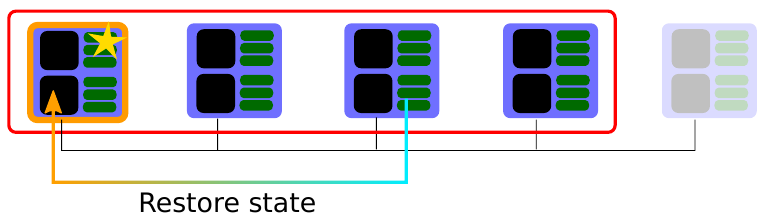

Our prototype approach, now implemented in Nektar++, uses User-Level Failure Mitigation (ULFM), a proposed fault-tolerance extension to the Message Passing Interface (MPI) standard, combined with a memory-efficient approach to representing and duplicating the static data. ULFM provides the critical functionality not only to detect failures among the processes participating in the simulation, but also to recover from them by transferring the computation associated with the failed node onto a spare node in the system. Having multiple spare nodes therefore provides resilience to further failures. Memory efficiency and efficient recovery are achieved by recording only the result of each MPI communication during the initialisation phase; these records can then be used to reconstruct the failed process on the spare node entirely independently of the other processes, eliminating expensive collective communication. An additional advantage of this approach is that it can potentially be applied to existing codes in a moderately non-intrusive manner. To date, we are able to run flow simulations that recover from as many sequential failures as there are spare nodes, with a memory overhead of only 10%.
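The record-and-replay idea for the initialisation phase can be sketched in a few lines. This is a hypothetical, single-process illustration, not the Nektar++ or ULFM API: `RecordingComm`, `ReplayComm`, `initialise_rank` and `fake_exchange` are invented names, and `fake_exchange` merely simulates what a rank would receive from its peers instead of calling MPI. During a normal run each communication result is logged; on the spare node, the same initialisation code is rerun against the log, so no other process needs to take part. The sketch assumes the initialisation is deterministic given the same inputs, and it keeps the log in an ordinary list; where the log is stored so that it survives the failure is not modelled here.

```python
from typing import Any, Callable, Dict, List, Tuple

# Stand-in for a real MPI call: takes (tag, payload) and returns what the
# process would receive. Hypothetical; no MPI library is used here.
Exchange = Callable[[str, Any], Any]


class RecordingComm:
    """Forwards each communication and records only its result."""

    def __init__(self, exchange: Exchange):
        self._exchange = exchange
        self.log: List[Tuple[str, Any]] = []

    def communicate(self, tag: str, payload: Any) -> Any:
        result = self._exchange(tag, payload)
        self.log.append((tag, result))
        return result


class ReplayComm:
    """Used on the spare node: answers each call from the log, in order,
    without contacting any other process."""

    def __init__(self, log: List[Tuple[str, Any]]):
        self._log = log
        self._pos = 0

    def communicate(self, tag: str, payload: Any) -> Any:
        logged_tag, result = self._log[self._pos]
        if logged_tag != tag:
            raise RuntimeError(f"replay mismatch: expected {logged_tag!r}, got {tag!r}")
        self._pos += 1
        return result


def initialise_rank(rank: int, comm) -> Dict[str, Any]:
    """Toy initialisation phase: local work plus communication builds static data."""
    local = [rank + i for i in range(3)]
    global_sum = comm.communicate("sum", sum(local))  # e.g. a global reduction
    halo = comm.communicate("halo", local[-1])        # e.g. a neighbour exchange
    return {"local": local, "global_sum": global_sum, "halo": halo}


SIZE = 4  # hypothetical number of processes


def fake_exchange(rank: int) -> Exchange:
    """Simulates what `rank` would receive from the other SIZE - 1 ranks."""
    def exchange(tag: str, payload: Any) -> Any:
        if tag == "sum":
            others = sum(sum(r + i for i in range(3)) for r in range(SIZE) if r != rank)
            return payload + others
        if tag == "halo":
            return (rank + 1) % SIZE + 2  # last local value of the next rank
        raise ValueError(f"unknown tag {tag!r}")
    return exchange


# Normal run on rank 2: communicate and record each result.
recorder = RecordingComm(fake_exchange(2))
original = initialise_rank(2, recorder)

# Rank 2 fails; the spare node rebuilds its static data from the log alone.
rebuilt = initialise_rank(2, ReplayComm(recorder.log))
print(rebuilt == original)
```

The log holds only the received results rather than the assembled matrices, which is one way a scheme like this can keep its memory overhead small.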

While we have a working initial prototype for our incompressible Navier-Stokes solver, further work is needed in a number of areas. In particular, we plan to benchmark the prototype on a number of ExaFLOW test cases to assess its scalability on current systems and to predict its efficacy and suitability for exascale computing when it arrives.

If you would like to find out more about the contents of this article, please contact Dr. Chris Cantwell.