Author: Dr. Julien Hoessler, McLaren

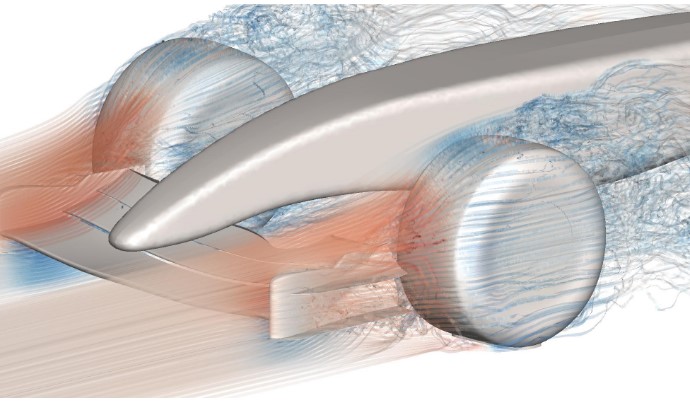

One of our goals here at McLaren, as industrial partners, is to demonstrate that the algorithms developed within the ExaFLOW consortium can help improve the accuracy and/or throughput of our production CFD simulations on complex geometries. To that end, we provided a demonstration case, referred to as the McLaren Front wing, which is based on the McLaren 17D and is representative of one of the major points of interest in Formula 1: the interaction of the vortical structures generated by the front wing endplate with the front wheel wake.

Figure 1: McLaren Front wing, initial run in Nek++

- Written by Anna Palaiologk

- Category: News

Read more: Preparation of industrial test cases to benchmark algorithmic improvements in ExaFLOW

Author: Patrick Vogler, IAG, University of Stuttgart

The steady increase in available computer resources has enabled engineers and scientists to use progressively more complex models to simulate a myriad of fluid flow problems. Yet, whereas modern high performance computing (HPC) systems have seen a steady growth in computing power, this trend has not been mirrored by a comparable gain in data transfer rates. Current systems can produce and process large amounts of data quickly, but their overall performance is often limited by how fast they can transfer and store the computed data. Since CFD (computational fluid dynamics) researchers continually seek to run simulations with ever higher temporal resolution on increasingly fine computational grids, the imminent move to exascale performance will only exacerbate this problem. [6]

- Written by Anna Palaiologk

- Category: News

Read more: Data compression strategies for exascale CFD simulations

Author: Martin Vymazal, Imperial College London

High-order methods on unstructured grids are increasingly used to improve the accuracy of flow simulations, since they provide both geometric flexibility and high fidelity. We are particularly interested in efficient algorithms for the incompressible Navier-Stokes equations that employ a high-order spatial discretization and a time-splitting scheme. The cost of one time step is largely determined by the work needed to obtain the pressure field, which is defined as the solution of a scalar elliptic problem. Several Galerkin-type methods are available for this task, each with specific advantages and drawbacks.
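
To see why the pressure solve dominates the cost of a time step, consider a generic first-order pressure-projection step with kinematic pressure $p$, viscosity $\nu$ and time step $\Delta t$. This is a textbook sketch that assumes an explicit treatment of all velocity terms and omits boundary conditions; it is not necessarily the splitting scheme used in this work:

$$
\begin{aligned}
\tilde{\mathbf{u}} &= \mathbf{u}^n + \Delta t\left[-(\mathbf{u}^n\cdot\nabla)\mathbf{u}^n + \nu\nabla^2\mathbf{u}^n\right],\\
\nabla^2 p^{n+1} &= \frac{1}{\Delta t}\,\nabla\cdot\tilde{\mathbf{u}},\\
\mathbf{u}^{n+1} &= \tilde{\mathbf{u}} - \Delta t\,\nabla p^{n+1}.
\end{aligned}
$$

Taking the divergence of the last line and substituting the second gives $\nabla\cdot\mathbf{u}^{n+1} = \nabla\cdot\tilde{\mathbf{u}} - \Delta t\,\nabla^2 p^{n+1} = 0$. The first and last lines are local, explicit updates, whereas the second is a globally coupled scalar elliptic (Poisson) problem that must be solved at every time step.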

The high-order continuous Galerkin (CG) method is the oldest. Compared to its discontinuous counterparts, it involves fewer unknowns (figure 1), especially in a low-order setting. The CG solution can be accelerated by static condensation, which produces a globally coupled system involving only the degrees of freedom on the mesh skeleton. The element-interior unknowns are subsequently recovered from the mesh skeleton data by solving independent local problems that require no parallel communication.
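
Static condensation can be illustrated on a small symmetric positive-definite system. The plain-Python sketch below uses a 1D tridiagonal matrix as a stand-in for an assembled stiffness matrix (an assumption made for brevity; a real high-order CG operator is denser within each element). Element vertices form the "skeleton"; the remaining nodes are element interiors. Because interiors of different elements are not directly coupled, the interior block is block-diagonal, so each element's contribution to the Schur complement and each interior recovery is an independent local solve. All function names are hypothetical.

```python
def solve_dense(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting (small dense systems)."""
    n = len(b)
    M = [list(map(float, row)) + [float(b[i])] for i, row in enumerate(A)]
    for k in range(n):
        p = max(range(k, n), key=lambda r: abs(M[r][k]))  # pivot row
        M[k], M[p] = M[p], M[k]
        for r in range(k + 1, n):
            factor = M[r][k] / M[k][k]
            for c in range(k, n + 1):
                M[r][c] -= factor * M[k][c]
    x = [0.0] * n
    for k in range(n - 1, -1, -1):  # back substitution
        s = sum(M[k][c] * x[c] for c in range(k + 1, n))
        x[k] = (M[k][n] - s) / M[k][k]
    return x


def tridiag(n):
    """SPD stand-in for a stiffness matrix: 2 on the diagonal, -1 off the diagonal."""
    return [[2.0 if r == c else (-1.0 if abs(r - c) == 1 else 0.0)
             for c in range(n)] for r in range(n)]


def static_condensation(A, f, skeleton, elements):
    """Solve A x = f by condensing out element-interior unknowns.

    skeleton: indices of the globally coupled (mesh-skeleton) unknowns.
    elements: one list of interior indices per element; interiors of different
    elements must not be coupled in A (block-diagonal interior block).
    """
    S = [[A[i][j] for j in skeleton] for i in skeleton]  # starts as A_bb
    g = [f[i] for i in skeleton]                         # starts as f_b
    for elem in elements:
        A_ee = [[A[r][c] for c in elem] for r in elem]
        # Local solve y = A_ee^{-1} f_e, then g -= A_be y.
        y = solve_dense(A_ee, [f[r] for r in elem])
        for bi, i in enumerate(skeleton):
            g[bi] -= sum(A[i][r] * y[k] for k, r in enumerate(elem))
        # S -= A_be A_ee^{-1} A_eb, one skeleton column at a time.
        for bj, j in enumerate(skeleton):
            col = [A[r][j] for r in elem]
            if not any(col):
                continue  # this skeleton unknown does not touch the element
            z = solve_dense(A_ee, col)
            for bi, i in enumerate(skeleton):
                S[bi][bj] -= sum(A[i][r] * z[k] for k, r in enumerate(elem))
    # Global step (the only one needing communication in parallel): skeleton system.
    x = [0.0] * len(f)
    for i, xb in zip(skeleton, solve_dense(S, g)):
        x[i] = xb
    # Independent local recoveries: x_e = A_ee^{-1} (f_e - A_eb x_b).
    for elem in elements:
        A_ee = [[A[r][c] for c in elem] for r in elem]
        rhs = [f[r] - sum(A[r][j] * x[j] for j in skeleton) for r in elem]
        for r, xr in zip(elem, solve_dense(A_ee, rhs)):
            x[r] = xr
    return x


if __name__ == "__main__":
    # 3 elements with 3 interior nodes each; element vertices form the skeleton.
    A = tridiag(13)
    f = [1.0] * 13
    x = static_condensation(A, f, [0, 4, 8, 12], [[1, 2, 3], [5, 6, 7], [9, 10, 11]])
    x_ref = solve_dense(A, f)
    print("max |x - x_ref| =", max(abs(a - b) for a, b in zip(x, x_ref)))
```

The condensed result matches a direct solve of the full system up to round-off, while only the skeleton system (4 unknowns here, instead of 13) is globally coupled.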

- Written by Anna Palaiologk

- Category: News

Read more: Weak Dirichlet Boundary Conditions and Hybrid DG on Groups of Elements

Author: Christian Jacobs, University of Southampton

Explicit finite difference (FD) algorithms lie at the core of many CFD models that solve the governing equations of fluid motion. Over the past decades, CFD model developers throughout the world have made huge efforts to rewrite the implementations of these algorithms in order to exploit new and improved computer hardware (for example, GPUs). Numerical modellers must therefore be proficient not only in their domain of expertise, but also in numerical analysis and parallel computing techniques.

As a result of this largely unsustainable burden on the numerical modeller, recent research has focussed on code generation as a way of automatically deriving code that implements numerical algorithms from a high-level problem specification. Modellers need only write the equations to be solved and choose the appropriate numerical algorithm, without worrying about the specifics of its parallel implementation on a particular hardware architecture; this latter task is handled by computer scientists. This separation of concerns is an important step towards the sustainability of CFD models as newer hardware arrives in the run-up to exascale computing.
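
A minimal sketch of the idea follows; it assumes nothing about OpenSBLI's actual interface. The "high-level specification" is just a stencil (point offsets) and a derivative order. Plain Python derives exact finite-difference weights with Fornberg's recursive algorithm and then emits a C loop. The names `fd_weights` and `generate_c_kernel` are hypothetical, halo/boundary handling is omitted, and a real generator would target parallel back ends rather than a single serial loop.

```python
from fractions import Fraction


def fd_weights(x0, xs, m):
    """Exact weights c_j with f^(m)(x0) ~ sum_j c_j f(xs[j]) on the given points
    (Fornberg's recursive algorithm, in exact rational arithmetic)."""
    n = len(xs)
    c = [[Fraction(0)] * (m + 1) for _ in range(n)]
    c[0][0] = Fraction(1)
    c1, c4 = Fraction(1), Fraction(xs[0] - x0)
    for i in range(1, n):
        mn = min(i, m)
        c2, c5, c4 = Fraction(1), c4, Fraction(xs[i] - x0)
        for j in range(i):
            c3 = Fraction(xs[i] - xs[j])
            c2 *= c3
            if j == i - 1:  # weights for the newly added point xs[i]
                for k in range(mn, 0, -1):
                    c[i][k] = c1 * (k * c[i - 1][k - 1] - c5 * c[i - 1][k]) / c2
                c[i][0] = -c1 * c5 * c[i - 1][0] / c2
            for k in range(mn, 0, -1):  # descending so c[j][k-1] is still the old value
                c[j][k] = (c4 * c[j][k] - k * c[j][k - 1]) / c3
            c[j][0] = c4 * c[j][0] / c3
        c1 = c2
    return [c[j][m] for j in range(n)]


def generate_c_kernel(name, offsets, weights, m):
    """Emit C source applying the stencil at interior points (halo points skipped)."""
    halo = max(abs(o) for o in offsets)
    terms = []
    for off, w in zip(offsets, weights):
        if w == 0:
            continue  # drop zero weights, e.g. the centre of a 1st-derivative stencil
        idx = "i" if off == 0 else f"i{off:+d}"
        terms.append(f"({w.numerator}.0/{w.denominator}.0)*u[{idx}]")
    scale = "*".join(["dx"] * m)  # unit-spacing weights scaled by dx^m
    return "\n".join([
        f"void {name}(int n, double dx, const double *u, double *du) {{",
        f"    for (int i = {halo}; i < n - {halo}; ++i) {{",
        f"        du[i] = ({' + '.join(terms)}) / ({scale});",
        "    }",
        "}",
    ])


if __name__ == "__main__":
    offsets = [-2, -1, 0, 1, 2]
    w = fd_weights(0, offsets, 1)  # 4th-order central first derivative
    print(w)
    print(generate_c_kernel("ddx_4th", offsets, w, 1))
```

Changing the stencil or derivative order changes only the specification passed in; the emitted kernel follows automatically, which is the separation of concerns described above in miniature.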

As part of the ExaFLOW project, researchers at the University of Southampton have developed the OpenSBLI framework [1] (https://opensbli.github.io), which features such automated code generation techniques for finite difference algorithms. The flexibility provided by code generation has enabled them to investigate the performance of several FD algorithms with different computational and memory intensities.
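
One common way to quantify computational versus memory intensity is arithmetic intensity (floating-point operations per byte moved), as used in the roofline model. The sketch below gives a back-of-the-envelope estimate for central first-derivative stencils of increasing order under two simplified memory models, perfect cache reuse and no reuse at all. The flop counts, the traffic model and the function name `stencil_intensity` are assumptions for illustration, not measurements from the OpenSBLI study.

```python
def stencil_intensity(nonzero_weights, values_read, bytes_per_value=8):
    """Flops per byte for one output point of a 1D FD derivative stencil.

    Simplified model (assumption): one multiply per nonzero weight, one fewer
    additions, one final scaling by 1/dx; memory traffic is `values_read`
    loads of u plus one store of du, each `bytes_per_value` bytes (double).
    """
    flops = nonzero_weights + (nonzero_weights - 1) + 1
    bytes_moved = (values_read + 1) * bytes_per_value
    return flops / bytes_moved


if __name__ == "__main__":
    # Central first-derivative stencils: order p uses p+1 points and p nonzero weights.
    print("order  AI(perfect reuse)  AI(no reuse)")
    for order in (2, 4, 6, 8):
        width = order + 1
        best = stencil_intensity(order, values_read=1)        # each u loaded once overall
        worst = stencil_intensity(order, values_read=width)   # every stencil point reloaded
        print(f"{order:5d}  {best:17.3f}  {worst:12.3f}")
```

Under both models, higher-order stencils perform more arithmetic per byte moved; whether that extra work costs time depends on whether the kernel is memory-bound or compute-bound on the target hardware.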

- Written by Anna Palaiologk

- Category: News

Author: Carlos Falconi, ASCS

The 8th regular general meeting of asc(s took place in Leinfelden-Echterdingen on 12 May 2016. Managing Director Alexander F. Walser reported a 22% increase in premiums and briefed network members and invited guests on the project activities of asc(s during 2015. Scientific Project Manager Carlos J. Falconi D. presented ExaFLOW to the audience, giving an overview of all technical objectives to be accomplished during the project period and reporting intermediate results. A discussion with members followed, aimed at collecting feedback on the exploitability of ExaFLOW results. The recently elected board members of asc(s, including Prof. Dr.-Ing. Dr. h.c. Dr. h.c. Prof. e.h. Michael M. Resch from the High Performance Computing Center at the University of Stuttgart, Dr. Steffen Frik from Adam Opel AG, Jürgen Kohler from Daimler AG, Dr. Detlef Schneider from Altair Engineering GmbH and Nurcan Rasig, Sales Manager at Cray Computer Deutschland, underlined the importance of transferring know-how and applying the project results to more industrial cases.

- Written by Anna Palaiologk

- Category: News

Read more: ExaFLOW at the 8th annual general meeting of asc(s