ExaFLOW targets clearly defined innovations in the area of exascale computing and CFD, with the aim of enhancing the efficiency and exploitability of an important class of applications on large-scale (exascale) systems.

Innovation 1: Mesh adaptivity, heterogeneous modelling, and resilience

Mesh adaptivity will reduce the cost of large-scale simulation through a more efficient use of resources, simplified grid generation and correctly calculated results. A heterogeneous modelling approach combining LES and quasi-DNS has accurately captured turbulent flow physics using only a fraction of the number of grid points required for a full DNS. To prevent losses in performance and efficiency when hardware errors occur, a library for multi-level in-memory checksum checkpointing and automatic recovery has been developed and is currently being tested.
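The checkpointing library itself is not described further here. As a minimal single-level sketch of the underlying idea, assuming a plain XOR parity stands in for the checksum and that each rank's state is an equally sized byte buffer, one lost in-memory checkpoint can be rebuilt from the survivors. All names (`make_parity`, `recover`, the sample data) are hypothetical and not part of any ExaFLOW interface.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length buffers."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(blocks: List[bytes]) -> bytes:
    """Checksum block: XOR of every rank's in-memory checkpoint."""
    return reduce(xor_bytes, blocks)


def recover(blocks: List[Optional[bytes]], parity: bytes) -> List[bytes]:
    """Rebuild at most one lost block (marked None) from survivors and parity."""
    lost = [i for i, b in enumerate(blocks) if b is None]
    if len(lost) > 1:
        raise ValueError("a single parity block can repair only one lost block")
    restored = list(blocks)
    if lost:
        survivors = [b for b in blocks if b is not None]
        # XOR-ing the parity with all surviving blocks leaves the missing one.
        restored[lost[0]] = reduce(xor_bytes, survivors, parity)
    return restored


# Hypothetical state of four ranks, each holding an 8-byte checkpoint.
checkpoints = [bytes([r] * 8) for r in (3, 14, 15, 92)]
parity = make_parity(checkpoints)

# Simulate losing the checkpoint of rank 2, then rebuild it.
damaged = [checkpoints[0], checkpoints[1], None, checkpoints[3]]
restored = recover(damaged, parity)
```

A multi-level scheme would layer several such protection levels (for example parity groups of different sizes, or an additional copy on disk); this sketch covers only one level and one failure.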

Innovation 2: Strong scaling at exascale through a mixed CG-HDG approach

This approach will exploit the benefits of next-generation exascale computing resources by allowing individual jobs to be executed efficiently on a larger number of cores than is presently possible. This will in turn allow large-scale complex flow simulations to be executed in shorter wall-clock times. Shorter execution times for high-fidelity simulations will accelerate the design cycle for a range of industrial applications.

Innovation 3: I/O data reduction via filtering

Reducing the data volume considerably before it is written alleviates the I/O bottleneck. This has been successful for smaller data sets using Singular Value Decomposition (SVD) as a data compression method, which performs feature extraction on the raw data. With exascale in mind, this method is currently being tested on large data sets.
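As a rough illustration of SVD-based data reduction, the sketch below keeps only the largest singular triplets of a snapshot matrix and stores those instead of the full field. It assumes NumPy is available and uses a synthetic, exactly rank-2 data set; the function names and the data are hypothetical and do not reflect the project's actual implementation.

```python
import numpy as np


def compress(field: np.ndarray, rank: int):
    """Keep the `rank` largest singular triplets of a 2-D snapshot matrix."""
    u, s, vt = np.linalg.svd(field, full_matrices=False)
    return u[:, :rank], s[:rank], vt[:rank, :]


def decompress(u: np.ndarray, s: np.ndarray, vt: np.ndarray) -> np.ndarray:
    """Rebuild the approximate field from the stored factors."""
    return (u * s) @ vt


# Hypothetical data: 200 grid points x 50 snapshots built from two modes.
x = np.linspace(0.0, 2.0 * np.pi, 200)[:, None]
t = np.linspace(0.0, 1.0, 50)[None, :]
snapshots = np.sin(x) * np.cos(2.0 * np.pi * t) + 0.5 * np.sin(3.0 * x) * t

u, s, vt = compress(snapshots, rank=2)
approx = decompress(u, s, vt)

stored = u.size + s.size + vt.size      # numbers written instead of the field
ratio = snapshots.size / stored         # compression ratio
error = np.linalg.norm(snapshots - approx) / np.linalg.norm(snapshots)
```

For real flow data the singular values decay more slowly, so the retained rank becomes a trade-off between output size and reconstruction error.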

Innovation 4: Energy-efficient algorithms

Energy and timing implications of reducing the clock frequency in different phases of Nektar++ have been investigated, highlighting the importance of appropriate parallelism.

- Written by Lena Bühler

The Nek5000 code is available for download and installation from either a Subversion (SVN) repository or a Git repository.

Links to both repositories are given at: https://nek5000.mcs.anl.gov/

The Git repository always mirrors the SVN repository. There are no official releases, since the Nek community of users and developers prefers immediate access to their contributions. However, because the software is updated on a constant basis, tags for stable releases as well as latest releases are available, so far only in the Git mirror of the code.

The reason for this is that SVN is maintained mainly for senior users who already have their own coding practices. It will continue to be maintained at Argonne National Laboratory (ANL), using the respective ANL accounts, while the Git repository is maintained on GitHub. A similar procedure is followed for the documentation: developers and users are free to contribute by editing and adding descriptions of features, and these changes are pushed back to the repository by issuing pull requests, which allow the Nek team to assess whether publication is in order. All information about these procedures is documented on the homepage. KTH maintains a close collaboration with the Nek team at ANL.

The code is run daily through a series of regression tests via Buildbot (to be transferred to Jenkins). The checks range from functional testing and compiler suite testing to unit testing. So far not all solvers benefit from unit testing, but work is ongoing in this direction. Successful Buildbot runs determine whether a version of the code is deemed stable.

A suite of examples is available with the source code. The examples illustrate modifications of geometry as well as solvers and implementations of various routines. Users are encouraged to submit their own example cases to be included in the distribution.

The use cases within ExaFLOW that involve Nek5000 will be packaged as examples and included in the repository for future reference.

You can also find on GitHub:

- Contributions to the Adaptive Mesh Refinement (AMR) code, available in the nekp4est branch and awaiting merging into the main branch.

- Contributions to the Algebraic Multi-Grid (AMG) codes, available in the main branch as of the commit with hash ending in c6a20a.

- The OpenACC port of Nek5000

- Link to ExaGS, a fork of the GS library originally supplied with Nek5000

The SBLI code solves the governing equations of motion for a compressible Newtonian fluid using a high-order discretisation with shock capturing. An entropy splitting approach is used for the Euler terms, and all spatial discretisations are carried out using a fourth-order central-difference scheme. Time integration is performed using compact-storage Runge-Kutta methods with third- and fourth-order options. Stable high-order boundary schemes are used, along with a Laplacian formulation of the viscous and heat conduction terms to prevent any odd-even decoupling associated with central schemes.
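To make the spatial scheme concrete, here is a minimal sketch of a fourth-order central-difference first derivative. It assumes a uniform, periodic one-dimensional grid, which sidesteps the boundary schemes mentioned above, and it is not taken from the SBLI source; the function names are hypothetical.

```python
import math


def ddx_central4(f, h):
    """Fourth-order central first derivative on a uniform periodic grid.

    Stencil: (-f[i+2] + 8 f[i+1] - 8 f[i-1] + f[i-2]) / (12 h)
    """
    n = len(f)
    return [
        (-f[(i + 2) % n] + 8.0 * f[(i + 1) % n]
         - 8.0 * f[(i - 1) % n] + f[(i - 2) % n]) / (12.0 * h)
        for i in range(n)
    ]


def max_error(n):
    """Largest error when differentiating sin(x) on n points over [0, 2*pi)."""
    h = 2.0 * math.pi / n
    x = [i * h for i in range(n)]
    df = ddx_central4([math.sin(xi) for xi in x], h)
    return max(abs(d - math.cos(xi)) for d, xi in zip(df, x))


# Halving the grid spacing should reduce the error by roughly 2**4.
err_coarse = max_error(32)
err_fine = max_error(64)
observed_order = math.log2(err_coarse / err_fine)
```

The observed order of convergence should come out close to four, which is a quick sanity check for any implementation of the stencil.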

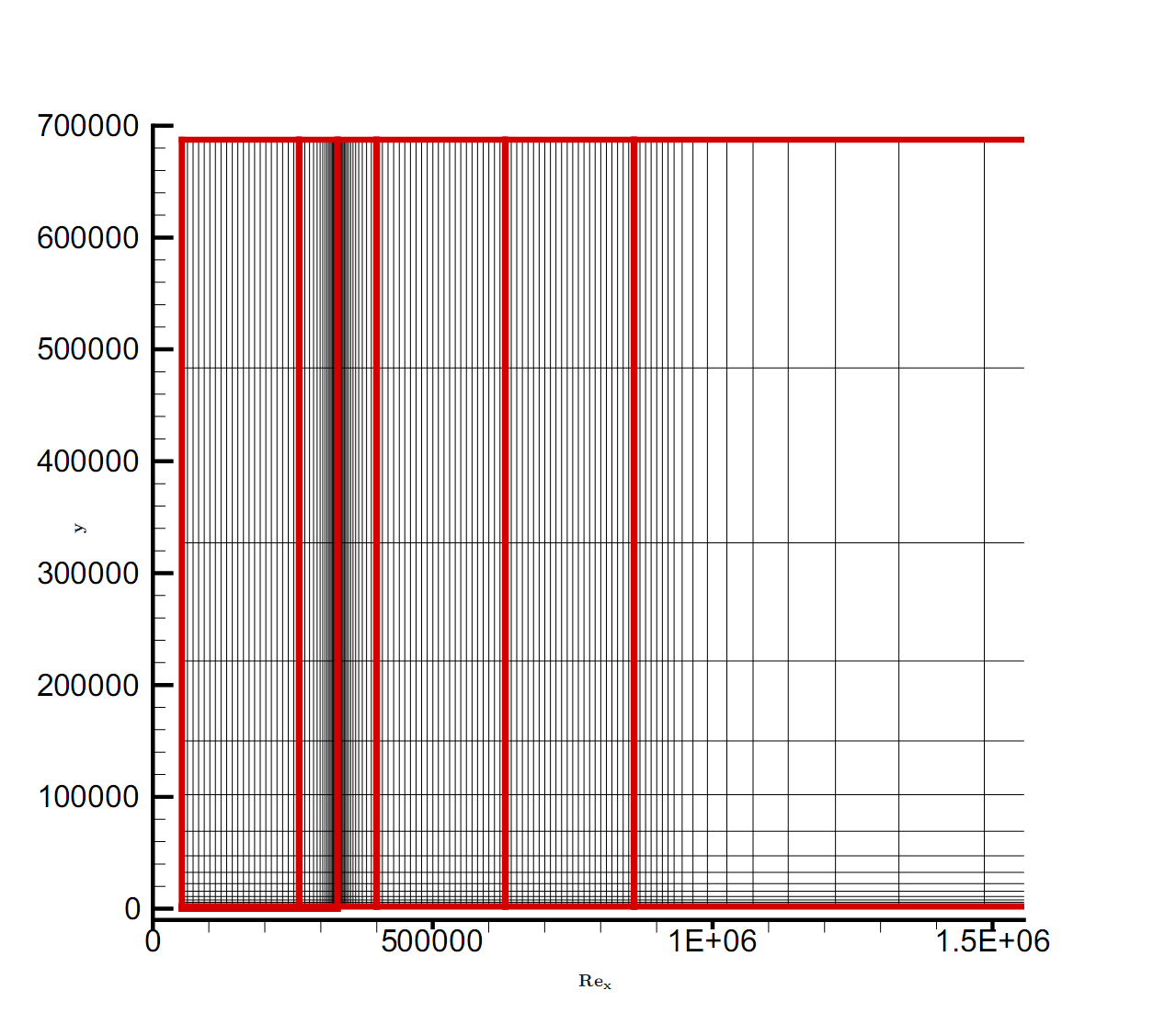

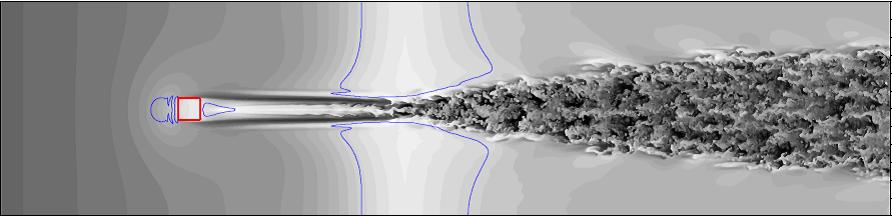

The DNS code ns3d is based on the complete Navier-Stokes equations for compressible fluids with the assumptions of an ideal gas and the Sutherland law for air. The differential equations are discretized in the streamwise and wall-normal directions with 6th-order compact or 8th-order explicit finite differences. Time integration is performed with a four-step, 4th-order Runge-Kutta scheme. Implicit and explicit filtering in space and time is possible if resolution or convergence problems occur. The code has been continuously optimized for vector and massively parallel computer systems, up to and including the current Cray XC40 system. Boundary conditions for sub- and supersonic flows can be appropriately specified at the boundaries of the integration domain. Grid transformation is used to cluster grid points in regions of interest, e.g. near a wall or a corner. For parallelization, the domain is split into several subdomains as illustrated in the figure.

Illustration of grid lines (black) and subdomains (red). A small step is located at Re_x = 3.3 × 10^5.
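The text does not say which four-step, 4th-order Runge-Kutta scheme ns3d uses. As a sketch under the assumption that it is the classical scheme, one time step for a scalar equation du/dt = rhs(t, u) could look as follows. The test problem and all names are hypothetical and unrelated to the ns3d source.

```python
import math


def rk4_step(rhs, t, u, dt):
    """Advance u by one step of the classical four-stage, 4th-order scheme."""
    k1 = rhs(t, u)
    k2 = rhs(t + 0.5 * dt, u + 0.5 * dt * k1)
    k3 = rhs(t + 0.5 * dt, u + 0.5 * dt * k2)
    k4 = rhs(t + dt, u + dt * k3)
    return u + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0


def integrate(rhs, u0, t_end, steps):
    """Integrate from t = 0 to t_end with a fixed number of equal steps."""
    dt = t_end / steps
    t, u = 0.0, u0
    for _ in range(steps):
        u = rk4_step(rhs, t, u, dt)
        t += dt
    return u


def decay(t, u):
    """Model problem du/dt = -u with exact solution exp(-t)."""
    return -u


# Halving the time step should reduce the error by roughly 2**4.
exact = math.exp(-1.0)
err_coarse = abs(integrate(decay, 1.0, 1.0, 10) - exact)
err_fine = abs(integrate(decay, 1.0, 1.0, 20) - exact)
observed_order = math.log2(err_coarse / err_fine)
```

In a flow solver `u` would be the full vector of conserved variables and `rhs` the discretized spatial operator; the stage structure stays the same.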

Nektar++ is a tensor-product-based finite element package designed to allow one to construct efficient classical low-polynomial-order h-type solvers (where h is the size of the finite element) as well as higher-order piecewise polynomial p-type solvers. The framework currently has the following capabilities:

- Representation of one, two and three-dimensional fields as a collection of piecewise continuous or discontinuous polynomial domains.

- Segment, plane and volume domains are permissible, as well as domains representing curves and surfaces (dimensionally-embedded domains).

- Hybrid shaped elements, i.e. triangles and quadrilaterals or tetrahedra, prisms and hexahedra.

- Both hierarchical and nodal expansion bases.

- Continuous or discontinuous Galerkin operators.

- Cross-platform support for Linux, Mac OS X and Windows.
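The tensor-product structure behind these capabilities can be sketched as follows. The example evaluates a two-dimensional expansion on a reference quadrilateral [-1, 1]^2 in two one-dimensional contraction stages (sum factorisation) instead of forming every two-dimensional basis function. It assumes a plain Legendre basis as the hierarchical expansion and does not use the Nektar++ API or its actual basis functions; all names are hypothetical.

```python
def legendre_basis(x, order):
    """Values L_0(x) .. L_order(x) from the three-term recurrence."""
    vals = [1.0, x]
    for n in range(1, order):
        # (n + 1) L_{n+1}(x) = (2n + 1) x L_n(x) - n L_{n-1}(x)
        vals.append(((2 * n + 1) * x * vals[n] - n * vals[n - 1]) / (n + 1))
    return vals[:order + 1]


def eval_tensor_expansion(coeffs, xs, ys):
    """Evaluate u(x_i, y_j) = sum_p sum_q coeffs[p][q] L_p(x_i) L_q(y_j)."""
    p_max = len(coeffs) - 1
    q_max = len(coeffs[0]) - 1
    bx = [legendre_basis(x, p_max) for x in xs]
    by = [legendre_basis(y, q_max) for y in ys]
    # Stage 1: contract the y-direction once for every mode p and point j.
    tmp = [[sum(coeffs[p][q] * by[j][q] for q in range(q_max + 1))
            for j in range(len(ys))]
           for p in range(p_max + 1)]
    # Stage 2: contract the x-direction using the stage-1 results.
    return [[sum(bx[i][p] * tmp[p][j] for p in range(p_max + 1))
             for j in range(len(ys))]
            for i in range(len(xs))]


# Hypothetical coefficients: u = L_1(x) L_1(y) + 2 L_2(y) = x*y + 3*y**2 - 1.
coeffs = [[0.0, 0.0, 2.0],
          [0.0, 1.0, 0.0],
          [0.0, 0.0, 0.0]]
xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
ys = [-1.0, 0.0, 0.5]
values = eval_tensor_expansion(coeffs, xs, ys)
```

Splitting the evaluation into one-dimensional stages is what keeps the cost of high polynomial orders manageable, since the work per element grows more slowly than with a direct double sum at every point.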

Nektar++ comes with a number of solvers and also allows one to construct a variety of new solvers. In this project we will primarily be using the incompressible Navier-Stokes solver.

Branch for the implementation of the ULFM fault tolerance mechanism

Branch for improvement work on the I/O subsystem
